Host Machine Data Flow Considerations

1. Overview

One key performance parameter of our SDRs is their high data throughput: the rate at which data can be transferred between the application running on the host system and the SDR used to transmit and receive the corresponding radio signal. This throughput is enabled by the FPGA, optical transceiver, optical fiber, and the network interface card (NIC) used to interface with the host system. This wide-band Ethernet connection between the SDR and host system uses SFP+ 10 Gbps data links for Crimson and qSFP+ 40-100 Gbps data links for Cyan. In the host system, a number of factors are also important for sustaining high data throughput, particularly the physical bus links between core components.

First, in subsections 1.1 - 1.2, we discuss how the receive (Rx) and transmit (Tx) systems pass data between the host system and FPGA. In subsections 2.1 - 2.7, we discuss challenges, considerations and limitations regarding the various devices and protocols used in the host system that communicates with the SDR. This covers the SDR's 10GBASE-R/40GBASE-R/100GBASE-R encoded transceiver and the host NIC, CPU, RAM and hard drives. In subsections 3.1 - 3.5, we give recommendations for a host system when using our SDR for transmit (Tx) and receive (Rx) functionality. Finally, in section 4 we discuss an example host system for high-speed streaming and recording of data at 1 GBps.

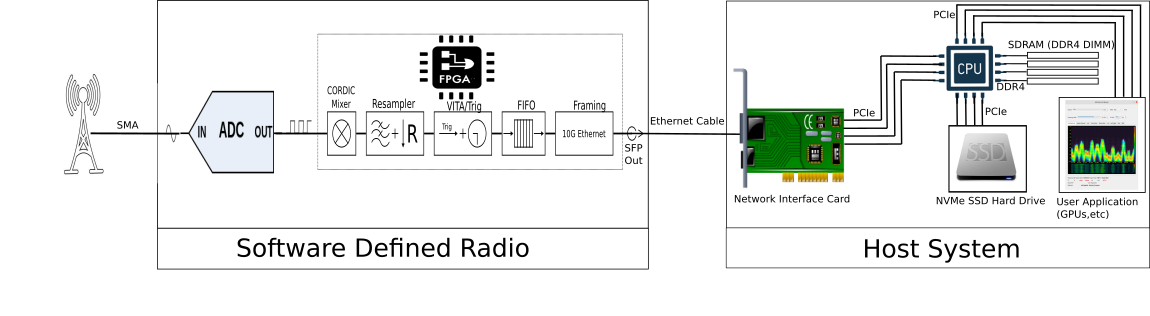

1.1. Receive (Rx) System

For Rx functionality (i.e. streaming RF data to disk), as shown in Figure 1, once the host system initiates sending (using flow control), the signal is digitized at the ADC, sent to the FPGA (over a JESD204B bus), processed and encapsulated there (VITA49/Ethernet framing), and then sent to the host system using Ethernet over SFP+/qSFP+ cables.

As an example, consider an application that demodulates FM RF signals, while saving the resulting RF data to a file, as shown in Figure 1. The following RF and data flows would occur:

- RF energy incident on the antenna is captured and the signal is sent over SMA to the SDR

- This RF signal is sampled by the SDR (through the ADC) and digitized into RF data, and sent to the FPGA over a JESD204B/C interface.

- This RF data is encapsulated and transmitted over the Ethernet network cable.

- The host system NIC receives the Ethernet packets and buffers them within the NIC itself.

- The host system’s CPU copies data from the NIC over a PCIe bus, through the CPU, to SDRAM (over the DDR4 bus) and informs the application.

- The application passes memory to the CPU (over DDR4), and the CPU modifies the data and returns it to memory (over DDR4).

- The application asks the CPU to save memory to a file. The CPU reads memory (over DDR4) and writes the contents to the drive (using PCIe for NVMe drives, or SATA/SAS otherwise).

Figure 1: Rx Data Flow Through SDR and Host System
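
As a rough aid to the Rx flow listed above, the sketch below estimates the payload rate that every link in that chain (Ethernet, PCIe, DDR4, storage) has to sustain. It is a minimal sketch rather than a product calculation: the function names are hypothetical, it assumes 16-bit I and 16-bit Q samples (4 bytes per complex sample), and the 90% headroom factor is an arbitrary allowance for packet framing overhead. The sample rate and channel count in the example are illustrative only.

```python
def rx_payload_rate_gbps(sample_rate_sps: float, channels: int,
                         bytes_per_sample: int = 4) -> float:
    """Payload rate in Gb/s for `channels` streams of complex samples.

    Assumption: 4 bytes per complex sample (16-bit I + 16-bit Q).
    """
    return sample_rate_sps * channels * bytes_per_sample * 8 / 1e9


def fits_link(rate_gbps: float, link_gbps: float, headroom: float = 0.9) -> bool:
    """True if the payload fits within a fraction `headroom` of the link rate.

    Assumption: the remaining capacity is left for framing overhead.
    """
    return rate_gbps <= link_gbps * headroom


# Illustrative numbers only: 4 channels at 50 MS/s over one 10 Gb/s link.
rate = rx_payload_rate_gbps(50e6, 4)
print(f"{rate:.1f} Gb/s, fits 10G link: {fits_link(rate, 10)}")
```

The same figure applies to each hop in the list, so the slowest hop sets the sustainable sample rate.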

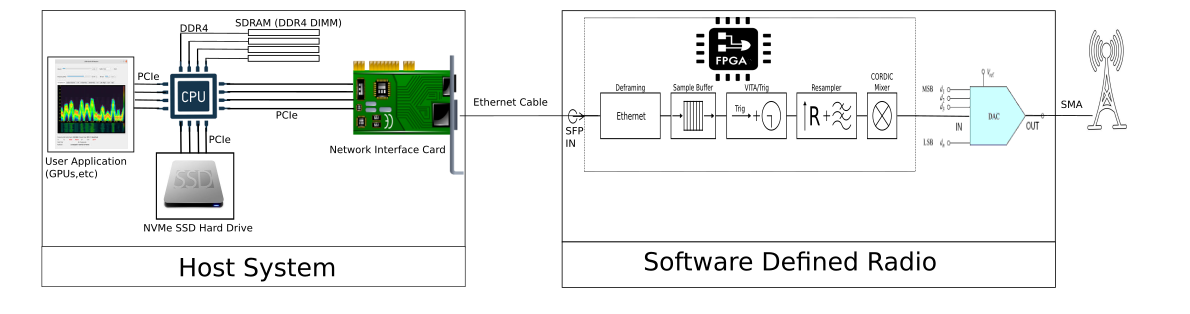

1.2. Transmit (Tx) System

During transmit (Tx) operation (i.e. streaming contents from a file to the SDR for transmission), as shown in Figure 2, the digitized signal is sent from the host system NIC over Ethernet to the SDR’s FPGA. For proper Tx performance, it is important to ensure high data rates are sustained over the Ethernet SFP+/qSFP+ connection. Essentially, during Tx the host system sends data over Ethernet until the appropriate buffer level is reached (as discussed under flow control). At this point, the host system stops sending data until the time-to-start command occurs, after which steady-state flow control engages and sends data at a rate that holds the buffer at its target level.

Figure 2: Tx Data Flow Through SDR and Host System

As an example, consider an application that reads data from a host system file, passes it to the SDR, and transmits this signal over an antenna as shown in Figure 2. The following RF and data flows would occur:

- The application asks the CPU to read the file from the hard drive, and passes data from the file to the memory (over DDR4) where the CPU has faster access to it.

- The CPU copies the data from the memory and passes it over a PCIe bus to the NIC on a continuous basis as it fills the SDR sample buffer.

- When the sample buffer is filled to ~70%, the host system will stop sending data until the time to start command occurs.

- At this point, the FPGA performs DSP and sends data from the sample buffer to the DAC (using JESD).

- As the sample buffer level on the FPGA decreases, control packets inform the host system to refill the sample buffer, as in the second step above.

- This will occur until the entire file being transmitted has been read from the host system.
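
The fill-then-hold behaviour in the steps above can be illustrated with a toy model. This is only a sketch of the idea, not the flow-control protocol itself: the function name, the fixed time step ("tick"), and all of the numbers are hypothetical; only the ~70% target comes from the text.

```python
def simulate_tx_buffer(buffer_size: int, target_fraction: float,
                       drain_per_tick: int, max_send_per_tick: int,
                       ticks: int) -> list[int]:
    """Return the buffer level after the prefill and after each tick.

    Raises RuntimeError if the FPGA side would run out of samples.
    """
    target = round(buffer_size * target_fraction)
    level = 0

    # Prefill phase: the host sends until the target level is reached,
    # then waits for the start time.
    while level < target:
        level += min(max_send_per_tick, target - level)
    levels = [level]

    # Steady state: the FPGA drains samples, the host tops the buffer up.
    for _ in range(ticks):
        level -= drain_per_tick
        if level < 0:
            raise RuntimeError("underflow: host could not keep up")
        level += min(max_send_per_tick, target - level)
        levels.append(level)
    return levels


# Hypothetical numbers: the host can send faster than the FPGA drains.
print(simulate_tx_buffer(1000, 0.7, drain_per_tick=50,
                         max_send_per_tick=80, ticks=5))
```

When `max_send_per_tick` is smaller than `drain_per_tick`, the level falls every tick and the model eventually raises, which mirrors the underflow condition a slow host would cause.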

2. Challenges and Considerations

Numerous challenges exist when it comes to host system design, and it often comes down to carefully managing data flow to ensure sufficient steady state bandwidth for the data you are looking to transmit or receive. For the host system, the CPU and memory, the storage devices, the NIC, and the software interface are crucial to handle the data being sent to and from the SDR.

2.1. Challenges of Streaming from SDR to Host System (Rx)

Because the sending (and receiving) of data over the Ethernet link is performed by the FPGA, it’s nominally guaranteed to be deterministic and synchronous within its clock domain. This is because data ingress from the analog-to-digital converter (ADC) is determined by the sample rate, and is thus uniform and monotonic.

However, as the data moves from the FPGA-based SDR to the host system, it crosses from the synchronous FPGA clock domain to the clock domains associated with the host system CPUs. As a result, latency and jitter management requires careful consideration to ensure not only sufficient throughput, but also a sufficient polling rate so that data isn’t silently dropped at the NIC or within the kernel networking buffers.
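
One place where the kernel buffering mentioned above can be adjusted is the socket receive buffer. The following is a minimal sketch, assuming the application receives the stream on a UDP socket through the kernel network stack (an assumption; the text does not specify this). The function name and the requested size are hypothetical. The kernel may cap the request (on Linux, at the `net.core.rmem_max` limit), so the size actually in effect is read back rather than assumed.

```python
import socket


def open_rx_socket(requested_bytes: int) -> tuple[socket.socket, int]:
    """Open a UDP socket and ask the kernel for a larger receive buffer.

    Returns the socket and the buffer size the kernel reports afterwards,
    which can differ from the requested value.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF, requested_bytes)
    granted = sock.getsockopt(socket.SOL_SOCKET, socket.SO_RCVBUF)
    return sock, granted


# Hypothetical request of 64 MiB; compare against what was granted.
sock, granted = open_rx_socket(64 * 1024 * 1024)
print(f"kernel reports {granted} bytes of receive buffer")
sock.close()
```

If the reported size is far below the request, the system-wide limit likely needs raising before the buffer can absorb scheduling jitter.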

Another difficult task is ensuring sufficient sustained write speed from memory to disk, for instance when capturing many channels over a wide bandwidth.

2.2. Challenges of Streaming from Host System to SDR (Tx)

Transmit operation is actually a more difficult task, as maintaining a consistent buffer level places strong jitter and latency demands on the blocking send call (using flow control). Once again, the kernel must not only be able to send data to the NIC at a sufficiently fast rate, but must also send it consistently, so that latency variations stay within what the on-board SDR Tx buffer can absorb. Latency variations greater than the FPGA buffer size would otherwise cause the FPGA to run out of samples and underflow. The NIC also needs to be able to handle the data flow, and the peripheral components in the host system must be able to send data to the NIC fast enough to keep the FPGA buffer filled.

Similarly to receive operations, careful attention must be paid to ensure that the data flow, from disk, to CPU, to NIC, not only meets sustained throughput requirements, but also low latency variation requirements throughout the transmit network stack.
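
The underflow condition described in this subsection can be turned into a simple time budget: the samples held in the Tx buffer, divided by the rate at which they are consumed, give the longest stall the host can have before the FPGA runs dry. The sketch below is illustrative only; the function name, buffer depth, and sample rate are hypothetical and not taken from any product specification.

```python
def max_host_stall_s(buffer_samples: int, fill_fraction: float,
                     sample_rate_sps: float) -> float:
    """Longest gap in host sends (seconds) before the Tx buffer underflows.

    Assumes the buffer sits at `fill_fraction` of its depth when the
    stall begins and is drained at a constant `sample_rate_sps`.
    """
    return buffer_samples * fill_fraction / sample_rate_sps


# Hypothetical: 65536-sample buffer held at 70%, drained at 100 MS/s.
stall = max_host_stall_s(65536, 0.7, 100e6)
print(f"host may stall for at most {stall * 1e6:.1f} microseconds")
```

Any scheduling delay, page fault, or disk read on the send path that exceeds this figure results in an underflow, which is why latency variation matters as much as average throughput.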

2.3. Considerations Regarding Network Interface Cards (NICs)

Network interface cards (NICs), like most peripheral devices, are capable of interrupting the CPU whenever a packet arrives. However, with our SDRs, the number of packets being sent may sometimes overwhelm the processor with interrupt-handling work, so we instead poll for data on an ongoing basis. There are a number of advantages to doing things this way: vast numbers of interrupts can be avoided, incoming packets can be processed more efficiently in batches, and, if packets must be dropped in response to load, they can be discarded in the interface. Polling is thus a win for our SDRs, which involve a lot of data. However, this sometimes requires dedicated FPGA cards with sufficiently large on-board buffers to avoid dropped packets.
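
The poll-and-batch pattern can be illustrated at the application level. Note that this is a user-space analogy using a non-blocking socket, not the driver-level or DPDK polling a real deployment would use; the function name, batch size, and buffer size are hypothetical.

```python
import socket


def drain_batch(sock: socket.socket, max_packets: int = 64,
                bufsize: int = 9000) -> list[bytes]:
    """Collect up to `max_packets` datagrams that are already queued.

    `sock` must be non-blocking. The loop stops as soon as the queue is
    empty, so the caller processes one batch per poll instead of waking
    up once per packet.
    """
    packets = []
    for _ in range(max_packets):
        try:
            packets.append(sock.recv(bufsize))
        except BlockingIOError:
            break  # nothing more queued right now
    return packets
```

A receive loop would call `drain_batch` repeatedly and hand each returned list to the processing stage, which keeps per-packet overhead low when packets arrive faster than they could be handled one by one.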

Of course, transferring data between the NIC and SDR is essential for proper Rx and Tx functionality. For our Crimson TNG SDR, the NICs must be able to support 10 Gbps Ethernet, and for Cyan, they must be able to support anywhere from 40 Gbps to 100 Gbps Ethernet, depending on the configuration. Moreover, these NICs must be able to send data to the CPU at high rates. This is where NICs that use a PCIe bus interface are essential. In higher-performance situations, an FPGA-based accelerated NIC is also beneficial. This is discussed in subsection 3.2.

2.4. Considerations Regarding RAM

For optimal performance of the host system, and thus the SDR, the use of DDR4 SDRAM is important. Double data rate (DDR) SDRAM transfers data twice as fast as single data rate SDRAM at the same clock frequency, because DDR transfers occur on both edges of the clock signal, whereas single data rate SDRAM transfers data only on the rising edge. Another benefit of using both edges is that the memory can run at a lower clock rate, using less energy, and still achieve faster speeds. Moreover, a single DDR4 SDRAM module can hold 64 GB or more, which is important for a number of applications, especially those that are Tx related.

It is particularly important to have a large amount of memory available for SDRs due to the amount of data that must be handled at a high data rate (1.6 - 3.2 Gbps per data pin for DDR4) between memory and CPU. For instance, handling a waveform of IQ sample data that is several seconds long and must be streamed at 1 - 3 gigasamples per second to the radio front end for transmission is a nontrivial matter.

Also, populating memory correctly so that the greatest number of memory channels is in use (i.e. correctly populating the RAM slots) is important for ensuring throughput.
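
Two quick estimates help put the memory requirements of this subsection in numbers: the size of a waveform held in RAM, and the theoretical peak bandwidth gained by populating more memory channels. This is a sketch under stated assumptions: the function names are hypothetical, samples are assumed to be 4 bytes each (16-bit I + 16-bit Q), and the bandwidth figure is the theoretical peak for a 64-bit DDR channel, which real systems do not fully reach.

```python
def waveform_bytes(duration_s: float, sample_rate_sps: float,
                   bytes_per_sample: int = 4) -> int:
    """RAM needed to hold a waveform of the given length."""
    return int(duration_s * sample_rate_sps * bytes_per_sample)


def ddr_peak_gb_per_s(transfer_rate_mtps: float, channels: int,
                      bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s across populated channels."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mtps * 1e6 * bytes_per_transfer * channels / 1e9


# A 3 s waveform at 1 GS/s, and DDR4-3200 with 1 vs 8 channels populated.
print(waveform_bytes(3, 1e9) / 1e9, "GB of waveform data")
print(ddr_peak_gb_per_s(3200, 1), "GB/s with one channel")
print(ddr_peak_gb_per_s(3200, 8), "GB/s with eight channels")
```

Since peak bandwidth scales with the number of populated channels, leaving slots empty on a multi-channel board gives up bandwidth even when total capacity is sufficient.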

2.5. Considerations Regarding CPU

The host system must be able to keep up with the SDR platform, whether processing all the data sent to it or preparing all the data to be sent from the CPU through the NICs to the SDR. To this end, the primary CPU considerations are the number of available cores, the supported PCIe architecture, and processor speed. To provide some rough numbers, supporting full-rate capture applications at 100 Gb/s generally requires around 6 to 12 cores and a minimum of 32 PCIe v4.0 lanes (x16 lanes to support the NIC, and another 4x4 lanes to support NVMe drives).
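
The lane count quoted above can be checked against raw PCIe 4.0 link rates. The sketch below uses the PCIe 4.0 signalling rate of 16 GT/s per lane with 128b/130b encoding; it ignores packet (TLP) and protocol overhead, so usable throughput is lower than these figures. The function names are hypothetical.

```python
PCIE4_GT_PER_LANE = 16.0          # giga-transfers per second per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding


def pcie4_link_gbps(lanes: int) -> float:
    """Raw PCIe 4.0 link rate in Gb/s, before protocol overhead."""
    return lanes * PCIE4_GT_PER_LANE * ENCODING_EFFICIENCY


def lane_budget(nic_lanes: int = 16, nvme_drives: int = 4,
                lanes_per_drive: int = 4) -> int:
    """Total lanes for one NIC plus a set of NVMe drives."""
    return nic_lanes + nvme_drives * lanes_per_drive


print(f"x16 NIC link:  {pcie4_link_gbps(16):.1f} Gb/s raw")
print(f"x4 NVMe link:  {pcie4_link_gbps(4):.1f} Gb/s raw")
print(f"lanes needed:  {lane_budget()}")
```

An x16 link leaves generous margin above a 100 Gb/s NIC, which is useful because protocol overhead and small transfers reduce the achievable rate.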

For multiprocessor systems, it’s critical to ensure proper core affinity between the NIC and storage drives. Care must be taken to specify core and process affinities to avoid unnecessary transfers across PCIe root complexes hosted by different CPUs. For host machines with multiple CPUs, this often requires reading the motherboard manual to determine the distribution of PCIe root complexes and ensuring that they’re correctly distributed to the various processors.
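
On Linux, one way to apply the affinity advice above is to look up which NUMA node a NIC is attached to and pin the streaming process to that node's cores. The following is a minimal, Linux-only sketch: the function names are hypothetical, any interface name passed in is a placeholder, and the sysfs paths are the standard Linux locations rather than anything specific to our SDRs.

```python
import os


def parse_cpulist(text: str) -> set[int]:
    """Parse a kernel cpulist string such as '0-7,16-23' into a set."""
    cpus: set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            first, last = part.split("-")
            cpus.update(range(int(first), int(last) + 1))
        else:
            cpus.add(int(part))
    return cpus


def cpus_local_to_nic(iface: str) -> set[int]:
    """Return the CPUs on the same NUMA node as network interface `iface`."""
    with open(f"/sys/class/net/{iface}/device/numa_node") as f:
        node = int(f.read())
    if node < 0:
        # -1 means the platform reports no NUMA locality for this device.
        return set(os.sched_getaffinity(0))
    with open(f"/sys/devices/system/node/node{node}/cpulist") as f:
        return parse_cpulist(f.read())


def pin_current_process_to_nic(iface: str) -> None:
    """Restrict the calling process to CPUs local to `iface`."""
    os.sched_setaffinity(0, cpus_local_to_nic(iface))
```

Calling `pin_current_process_to_nic` with the name of the SDR-facing interface before starting the stream keeps packet handling on the CPU that hosts the NIC's PCIe root complex. The same lookup can be repeated for storage devices so that the NIC and the drives it feeds share a processor.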

2.6. Considerations Regarding Hard Drives

PCIe, short for Peripheral Component Interconnect Express, is a standard high-speed bus interface used by, among other devices, high-performance solid state drives (SSDs). When your computer first boots, PCIe enumeration determines which devices are attached or plugged into the motherboard. It identifies the links between each device, creates a traffic map, and negotiates the bandwidth of each link. In the case of SDR host systems, particular care needs to be taken to ensure sufficient PCIe bandwidth between storage devices, CPUs, and NICs. Non-Volatile Memory Express, short for NVMe, is a communication transfer protocol that runs on top of transfer interfaces such as PCIe. NVMe was purpose-built for fast access to PCIe SSDs, and is an alternative to AHCI/SATA and to the SCSI protocol used by SAS.

Today’s NVMe drives (PCIe4 SSDs) tend to be four-lane devices with mean throughput around 1.8 to 2.2 GB/s. Avoid NVMe drives that rely on caching, because their mean throughput is lower when the drive is full. The storage system must also have enough capacity to record the data continuously. This can be challenging, as recording 10 minutes of RF data streaming at 100 Gb/s requires over 7 TB of storage capacity.
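
The capacity figure above follows directly from the data rate and the recording time. As a small worked sketch (the function name is hypothetical, and TB here means decimal terabytes, 10^12 bytes):

```python
def capture_size_tb(rate_gbps: float, duration_s: float) -> float:
    """Storage needed, in decimal TB, to record at `rate_gbps` for `duration_s`."""
    bytes_per_second = rate_gbps * 1e9 / 8
    return bytes_per_second * duration_s / 1e12


# 10 minutes at 100 Gb/s, as in the text.
print(f"{capture_size_tb(100, 10 * 60):.1f} TB")
```

This counts raw stream data only; file system overhead and any metadata recorded alongside the samples add to it.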

Given the importance of matching the throughput of the entire system with each component, it’s worth considering that a single NVMe drive supports approximately 16 Gb/s. If the throughput from the NIC is greater than that, as in the case of 100-Gb/s operation, it’s necessary to split up writes across several devices, which allows several slower devices to be aggregated together. Particularly for high-speed operation, software RAID configurations are discouraged in favour of direct access to the devices or, if RAID is absolutely required, hardware RAID support. By carefully measuring the bandwidth associated with each storage device and matching that with the bandwidth of the NICs, you can ensure sufficient capacity to effectively read and write data from the storage devices.
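
Splitting writes across devices can be sketched as follows. The drive count uses the approximate 16 Gb/s per-drive figure from the text; the round-robin writer is only one simple way to distribute chunks and is an illustration, not a description of how our software writes data. All names are hypothetical, and in-memory buffers stand in for files opened on separate drives.

```python
import io
import math
from typing import BinaryIO, Iterable, Sequence


def drives_needed(nic_gbps: float, per_drive_gbps: float) -> int:
    """Minimum number of drives whose combined rate covers the NIC rate."""
    return math.ceil(nic_gbps / per_drive_gbps)


def write_striped(chunks: Iterable[bytes], outputs: Sequence[BinaryIO]) -> None:
    """Write successive chunks to the outputs in round-robin order."""
    for i, chunk in enumerate(chunks):
        outputs[i % len(outputs)].write(chunk)


print(drives_needed(100, 16), "drives for 100 Gb/s at ~16 Gb/s each")

# Stand-ins for one open file per drive.
buffers = [io.BytesIO() for _ in range(3)]
write_striped([b"a", b"b", b"c", b"d"], buffers)
print([b.getvalue() for b in buffers])
```

In practice each output would be written from its own thread or asynchronous queue so that a momentarily slow drive does not stall the others, and extra drives beyond the minimum provide margin for throughput dips.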

In addition to considering IO speed, we also need to consider file systems. High-performing file systems include XFS and ext4. These file systems are reliable and perform common disk operations quickly.

2.7. Considerations Regarding Software Defined Networking

Operating-system kernels handle networking through their network stack. This stack is good enough for most applications, but when it comes to high-throughput systems, it’s simply too slow. This problem is compounded by the fact that network speeds are increasing while the network stack can’t keep up.

To solve this issue, the kernel network stack can be bypassed with other software networking tools such as DPDK. Bypassing the kernel network stack also allows for new technologies to be implemented without needing to change core operating-system kernel code. Kernel bypass stacks move protocol processing to the user space. This lets applications directly access and handle their own networking packets and increases throughput. It’s a very important consideration for the host system, since using the kernel stack would defeat the purpose of designing the hardware components to handle a certain throughput. For this reason, employing a kernel bypass stack is vital in the operation of a high-throughput SDR system.

3. Host System Selection for Our SDRs

A variety of challenges and considerations exist regarding host system performance, as discussed above. Below we outline some COTS components that ensure good performance of a host system connected to our SDRs (both Crimson TNG and Cyan). For the host system, the CPU and memory, the storage devices, the NIC, and the software interface are crucial to handle the data being sent by the SDR.

Note

These are just rough guidelines for devices tested to work with our SDR products. You may need to design and customize your host system in order to ensure optimal performance for the intended application. For more information on how we can recommend or design a system to best meet your needs, [contact us](mailto:solutions@pervices.com).

3.1. Selecting an Operating System (OS)

Performance can differ between the operating systems on which our software (GNU Radio and UHD) runs. We strongly suggest using Arch Linux, as this is what we develop on and use in our labs. Other operating systems we support include Ubuntu and CentOS, but these are not optimal for high performance, i.e. high sample rates and high bandwidths.

Note

For installation of GNU Radio and UHD for your specific OS distribution, instructions are available at:

[How to Set Up UHD and GNU Radio](../../how-to/pvht-3-softwaresetup)

3.2. Selecting a Network Interface Card (NIC)

The NIC facilitates the connection between the SDR platform and the host system. There are two common NIC solutions: commodity adapters and specialized FPGA adapters. Though many commodity adapters on the market can handle up to 100-Gb/s throughput, they cannot do so under every traffic condition. FPGA-based adapters have an edge, with the primary benefit being large buffers (in the form of onboard memory) that support 100% packet-capture operations. Some FPGA acceleration also helps reduce protocol overhead, as larger chunks of data can be transferred per operation. In addition, unlike commodity adapters, FPGA adapters are able to aggregate traffic in hardware at line rates. In most cases, there’s a substantial benefit to using FPGA-accelerated NICs. It is also essential that these NICs use a PCIe bus connection to provide the data throughput necessary for Tx and Rx functionality.

Note

NIC and fibre specifications tested for Per Vices SDRs are:

[Crimson TNG NICs and Fibre Requirements](../../crimson/specs/#network-interface-card-requirements)

[Cyan NICs and Fibre Requirements](../../cyan/specs/#network-interface-card-nic-requirements)

You will also want to ensure that the network card supports DPDK. This is a set of libraries that allows userspace programs to offload packet processing from the operating system kernel. This offloading achieves higher computing efficiency and higher packet throughput than is possible using the interrupt-driven processing provided in the kernel. DPDK provides a programming framework for x86, ARM, and PowerPC processors and enables faster development of high-speed data packet networking applications. It is primarily valuable in high-performance host systems connected to our Cyan platforms.

3.3. Selecting RAM

The host system should also possess a memory controller that can support error-correcting codes (ECC) to maintain data integrity. Similar to the SDR platform, ensuring ample memory size is useful as is maximizing memory bandwidth by populating all available memory slots. This also ensures efficient utilization of available direct-memory-access (DMA) controller resources and maximizes memory bus size and throughput.

Note

The recommended RAM specifications are:

Crimson: 64-128 GB, DDR4 SDRAM

Cyan: 512 GB, DDR4-3200 SDRAM

3.4. Selecting a CPU

Important to the operation of the host system’s CPU are the number of available cores, supported PCIe architecture, and processor speed. The required CPU will vary greatly depending on how much data throughput is required, as discussed in subsection 2.5.

Note

The minimal recommended CPU specifications are:

Crimson: 64-bit, 4-core, 8 threads, 3.5-4.5 GHz

Cyan: 64-bit, 16-core, 32 threads, 3.5-4.5 GHz

3.5. Selecting Hard Drives: NVMe (SSD)

As mentioned, Non-Volatile Memory Express, short for NVMe, is a communication transfer protocol specially designed for accessing high-speed storage media devices, such as flash and next-gen SSDs. For rapid IO of large volumes of data and satisfying the requirement of maximum throughput, PCIe4-based solid-state drives (SSDs) can be utilized. Compared to SATA SSDs, NVMe SSDs can generally reach higher sustained write speeds. Requirements for this are largely application dependent, and thus, we don’t have any specification recommendations here.

4. Example of Host System for High-speed Data Streaming and Recording

As an example, if we consider streaming 8 channels of IQ data at 1 GBps and recording the data, the host system would have the following specs:

- Server type system running Arch Linux

- 2x CPUs, each 16-core, 32-thread, 3.9 GHz

- 32x 16 GB, DDR4-3200 ECC LP RDIMM RAM

- SATA/SAS, PCIe storage controllers

- 100GBASE-R NICs, with QSFP28 network ports, onboard SDRAM, supports DPDK

5. Conclusion

Above, we have discussed the challenges and considerations regarding host system data throughput performance, as well as our recommendations concerning these matters. Designing a host system is not a one-size-fits-all situation; it largely depends on what you plan to do with our SDRs. Moreover, configuring such host systems for extremely high data throughput and high-speed storage of data is very challenging. To this end, it is best to discuss host system options with us once you have determined your exact application and your requirements for what you’re transmitting, receiving, and what you plan on doing with the data. For more information, please contact us.